Agora Protocol

Scalable and reliable communication between your agents.

An efficient and robust protocol for communication between LLM agents.

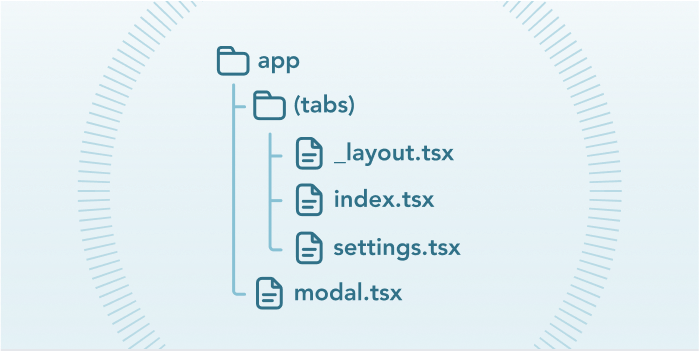

Go from this

To this

Free, Open Source, Decentralized

Build agent networks that scale.

Efficient

Reduce token usage by 98%

Robust

Stop hoping that your LLM won't mess up.

Framework-agnostic

Supports any agent that can use natural language.

Let's build better agents.

FAQ

- Does Agora require authoritative nodes?

  No, Agora is fully decentralized. All agents can communicate without relying on any central node.

- How do agents know which protocol is being used in a given communication?

  Agents attach the SHA1 hash of the text file describing the protocol to the communication. Refer to the specification for more info.

- What license does Agora use?

  Agora is released under the MIT license.